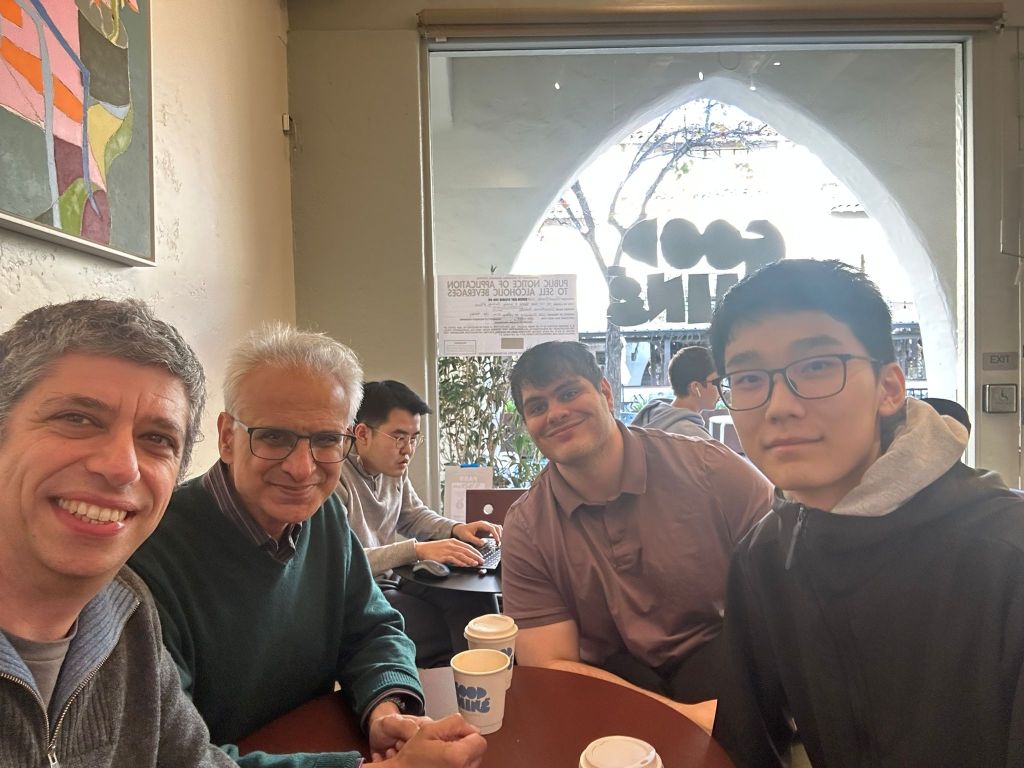

This month I travelled to California for the FPGA 2026 conference with my two new PhD students, Ben Zhang and Bardia Zadeh, combining the trip with a number of visits in the San Francisco Bay Area in the days preceding the conference. This post provides a brief summary of my visit.

First up on our travels was dinner with Rocco Salvia. Rocco was my Research Assistant – we worked together some years ago on automating the analysis of average-case numerical behaviour of reduced-precision floating-point computation. He is now working for Zoox, the robotaxi company owned by Amazon, where his first-rate engineering skills are being put to good use!

The following day we went to visit Max Willsey at UC Berkeley and his PhD student Russel Arbore. Max and I (together with others) organised a Dagstuhl workshop on e-graphs recently, and we went to pick up the research conversations we left behind a month ago in Germany and spend some good quality whiteboard time together. Russel and Max are working on some really exciting problems in program analysis.

That afternoon, we had the chance to catch up with my old friend and colleague Satnam Singh, now working for the startup harmonic.fun. Harmonic is a really exciting company, combining modern AI tools with Lean-based formal theorem proving. Expect great things here.

The following morning, we went to visit AMD, with whom I have longstanding collaborations. Amongst others, we met my two former PhD students Sam Bayliss and Erwei Wang there, and discussed our ongoing work on e-graphs and on efficient machine learning, as well as finding out about the latest work in Sam’s team at AMD, including their release of Triton-XDNA.

That afternoon we visited NVIDIA’s stunning HQ to meet with Rajarshi Roy and Atefeh Sohrabizadeh. I know both of them through the EPSRC International Centre-to-Centre grant I led: Rajarshi was introduced to me by Bryan Catanzaro as the author of some really interesting work on reinforcement learning for computer arithmetic design, and spoke at our EPSRC project’s annual workshop. Atefeh was a PhD student affiliated with the Centre (advised by Jason Cong, UCLA) and spent some time visiting my research group. We heard about recent NVIDIA work on AI models to aid software engineering and on combined speech and language models.

It has long been a tradition that Peter Cheung, when in the Bay Area, organises a get-together of alumni of the Circuits and Systems Group (formerly Information Engineering group, when Bob Spence was Head of Group). This time was no exception – we met up with many of our department’s former students, and some came to a great dinner too. It’s always a delight to hear about the activity of our alumni, spread across the tech companies in the Bay Area.

After a flying visit to my former PhD student’s family, we then made it down to Monterey for FPGA 2026. Regular readers of this blog will know that I’ve been attending FPGA for more than 20 years, have been Program Chair, General Chair and Finance Chair, and am now a Steering Committee member of the conference. So it always feels a little like “coming home”. I also love Monterey – despite the touristy bits – and am a fan of Steinbeck’s writing, in which he immortalised Monterey with some of the best opening lines ever (of Cannery Row): “Cannery Row in Monterey in California is a poem, a stink, a grating noise, a quality of light, a habit, a nostalgia, a dream.”

This year, the general chair of FPGA 2026 was Jing Li, and the great programme was put together by Grace Zgheib.

My favourite paper at FPGA this year also won the best paper prize. Duc Hoang and colleagues identified that Kolmogorov-Arnold Networks are a natural fit for the LUT-based neural networks my group pioneered, e.g. [1,2]. They form a really interesting design point, overcoming the exponential scaling of area with the product of precision and neuron fanin present in both my SOTA work with Marta Andronic and earlier work like Xilinx LogicNets, to produce a design that scales exponentially only in the precision. I very much enjoyed reading this paper and seeing it presented, and I think it opens up new directions for future work in this area.

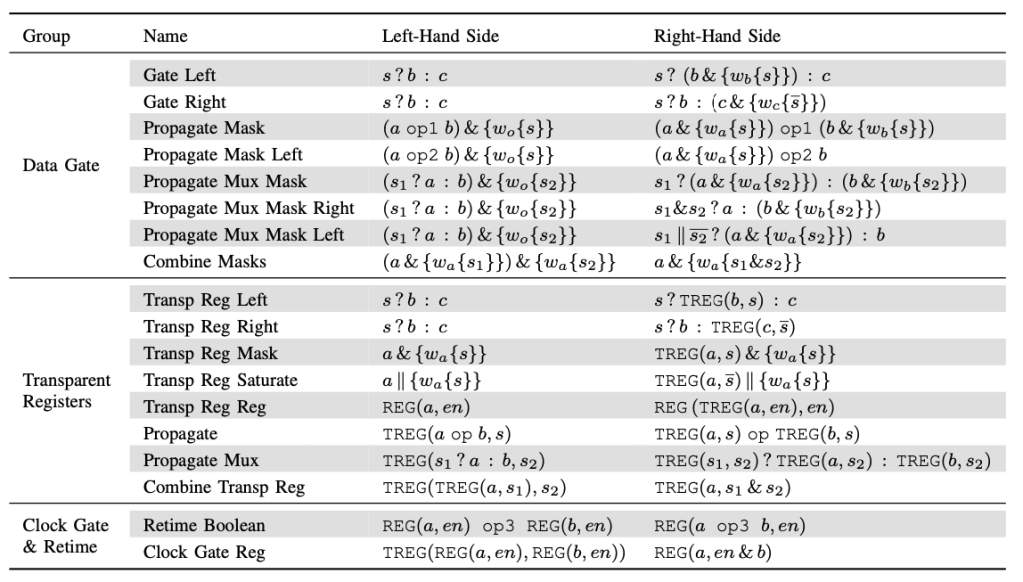
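To put rough numbers on the scaling argument above, here is a back-of-the-envelope sketch. It is a simplification for illustration, not the cost model from the paper: it counts truth-table entries only, assumes a conventional LUT-based neuron is one table indexed by all of its input bits, and assumes a KAN-style neuron uses one small table per input edge (ignoring the adder that combines them). The function names are hypothetical.

```python
def monolithic_lut_entries(precision_bits: int, fanin: int) -> int:
    """Truth-table entries for one neuron implemented as a single table
    over all its inputs: exponential in precision * fanin."""
    return 2 ** (precision_bits * fanin)


def per_edge_lut_entries(precision_bits: int, fanin: int) -> int:
    """Truth-table entries when each input edge has its own univariate
    table (assumed KAN-style structure): exponential in precision only,
    linear in fanin. The cost of summing the edge outputs is ignored."""
    return fanin * 2 ** precision_bits


if __name__ == "__main__":
    # Illustrative (precision, fanin) pairs, not taken from any paper.
    for precision_bits, fanin in [(2, 3), (4, 6)]:
        mono = monolithic_lut_entries(precision_bits, fanin)
        edge = per_edge_lut_entries(precision_bits, fanin)
        print(f"precision={precision_bits} fanin={fanin}: "
              f"monolithic={mono} per-edge={edge}")
```

Even at modest precision and fanin the gap between the two counts grows very quickly, which is the intuition behind the design point described above.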

I also particularly enjoyed the work of Shun Katsumi, Emmet Murphy and Lana Josipović (ETH Zurich) on eager execution in elastic circuits. I previously collaborated with Lana on elastic circuits, and it’s great to see the latest work in this area and the use of formal verification tools to prove correctness of performance enhancements. I had a very nice discussion with Lana about possible ways to take this work further.

Rouzbeh Pirayadi, Ayatallah Elakhras, Mirjana Stojilović and Paolo Ienne (EPFL) had a really interesting paper on avoiding the overhead of load-store queues in dynamic high-level synthesis. (This paper was also the runner-up for best paper.)

From my own institution, Oliver Cosgrove, Ally Donaldson and John Wickerson had a great paper on fuzzing FPGA place and route tools, which has led a vendor to fix a bug that their tool uncovered.

There were many other good papers, but just to mention a couple that I found particularly aligned with my own interests: EdgeSort, on the design of line-rate streaming sorters, and HACE, on extracting CDFGs from RTL, were both really interesting to hear presented.

It was great to be reunited with so many international colleagues and to provide my new students Bardia and Ben with the chance to begin their journey of integration into this welcoming community.